[youtube eXy2oX56WAA]

CHAPEL HILL, NC–The computer simulations created by Dinesh Manocha, Ming Lin and their graduate students in the UNC Chapel Hill computer science department display data with realism and creativity.

But until recently, their interfaces to the simulations (mouse, Joybox game controller and haptic feedback) were nothing out of the ordinary. That changed when Manocha, Matthew Mason Distinguished Professor of computer science, and Lin, Beverly Long Distinguished Professor of computer science, discovered RENCI’s multi-touch visualization table.

The 42 x 46-inch multi-touch table, built and deployed by RENCI researchers, uses an architecture of commodity-level components and custom software to create a high-resolution interactive display for analyzing, visualizing and interacting with data. The table uses four rear projection cameras capable of 1,000 x 1,000 pixel touch resolution at 60 to 90 frames per second. A 3.2 GHz quad-core Intel Xeon computer drives the system.

The multi-touch system allows a user or group of users to intuitively interact with their data, applications and peripherals in much the same way they use hand-held devices like the iPhone. Visual representations of data are moved and resized by specified touches and gestures. Users drill down into the data and execute commands the same way, but on a much larger surface.

For Manocha and students Sean Curtis, Stephen J. Guy and Sachin Patil, the table enables a research system designed to understand the behavior of large crowds. The research group uses new algorithms developed by the computer science department’s GAMMA group to create simulations of a large crowd moving through a public space. The simulation looks like one of the popular virtual worlds, such as Second Life. The researchers aim to show how these simulations can be used to plan evacuations of large spaces, direct traffic flow in real time or understand the social dynamics of large crowds in order to improve urban planning and the design of new buildings.

Before using the RENCI multi-touch table, members of the research team used conventional point-and-click technology to reroute or direct portions of their crowd to different exits on a simulated convention center exhibit floor. They would select a portion of the crowd, then click and drag to study how it would move when exits were blocked or how bottlenecks could be avoided.

“I visited RENCI and saw the table and thought it was a more natural way to work with the data,” said Manocha. “You can direct the system using any number of gestures and you can select your data with much more precision because you are using your fingers instead of a mouse. This multi-touch interface offers many new possibilities to design intuitive interfaces.”

[youtube lfyneib5UQE]

The table was built at RENCI’s main office in Chapel Hill and moved to a lab in Sitterson Hall on the UNC campus in November so that it could be used daily with computer science research projects. That same month, Manocha and his students presented a demonstration of the crowd simulation live from their campus lab to the RENCI exhibit at SC09, the annual high performance computing, networking and analysis conference, in Portland, OR.

Virtual music maker

Another project that uses the table involves sound synthesis, the process of generating sounds using a set of algorithms, which allows the researchers to design new virtual musical instruments. The computer-generated audio can sound natural, like a musical instrument, for example, or it can be a new electronic creation. The process of using simulation to produce sound or to trace its path helps to develop more realistic audio in virtual environments and gives architects a better tool for conducting acoustic analysis.

So far, Ming Lin and her graduate student Zhimin Ren have developed a virtual xylophone, which they “play” on the multi-touch table. Playing involves hitting the simulated xylophone using makeshift mallets. In a display of grad student resourcefulness, Zhimin made the mallets by attaching round rubber cat toys to the ends of fondue forks.

Putting the crowd simulation and sound synthesis work on the multi-touch table involved working closely with RENCI Senior Visualization Engineer Jason Coposky, who programmed a wide range of gestures and touches for use on the table.

“Jason gave us the infrastructure,” said Guy, one of the graduate students involved in the crowd simulation project. “He provided the gestures and we gave them meaning in terms of directing the crowd simulation.”

Currently, 1,025 virtual people are part of the crowd simulation and the researchers are developing new algorithms that will let them simulate larger crowds and render movement more realistically. The work has piqued the interest of the U.S. military and homeland security agencies, which need to figure out effective and safe methods for moving troops through hostile environments and for managing crowds during emergencies, said Manocha.

As for the sound synthesis work, the xylophone is only a start. Zhimin and Lin are designing several kinds of drums, and the work could lead to development of a new generation of virtual instruments that are used through a natural interface.

“We can generate the sound of one instrument now, but if we can generate many instruments, we could have a whole orchestra,” said Zhimin.

Beethoven on the multi-touch table? Or maybe you’d prefer the Rolling Stones? Multi-touch technology offers multiple possibilities.

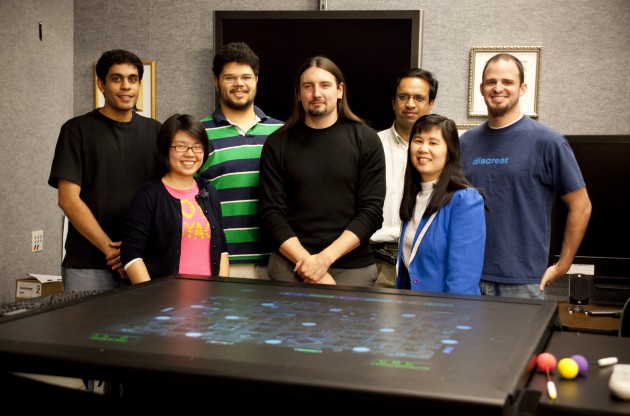

From left to right: UNC grad students Sachin Patil, Zhimin Ren and Stephen J. Guy, RENCI’s Jason Coposky, UNC faculty members Dinesh Manocha and Ming C. Lin, and UNC grad student Sean Curtis.

Researchers:

Ming Lin

Dinesh Manocha

Sean Curtis

Stephen J. Guy

Sachin Patil

Zhimin Ren

Funding: This research is supported by the Army Research Office, Intel, the National Science Foundation and the U.S. Army Research, Development and Engineering Command.