Imageomics Institute will advance computational methods for studying Earth’s biodiversity

The Renaissance Computing Institute (RENCI) has been named as a partner on an ambitious new effort to use images of living organisms as the basis for understanding the biological processes of life on Earth. The project, to be led by faculty from The Ohio State University’s Translational Data Analytics Institute, has been awarded a $15 million grant from the National Science Foundation as part of NSF’s Harnessing the Data Revolution initiative.

The new entity, which will be called the Imageomics Institute, aims to establish imageomics as a new field of study that has the potential to transform biomedical, agricultural and basic biological sciences. Similar to genomics before it, which applied computation to the study of the human genome, imageomics will leverage computer science to help scientists extract meaning from an otherwise unwieldy amount of natural image data.

“There are many more species out there than scientists have been able to study in-depth,” said Jim Balhoff, a Senior Research Scientist at RENCI who will lead the RENCI component of the project. “If we can leverage machine learning to interpret images of living organisms, that would provide a scalable way to process large amounts of information about species, complementing the work of trained wildlife biologists.”

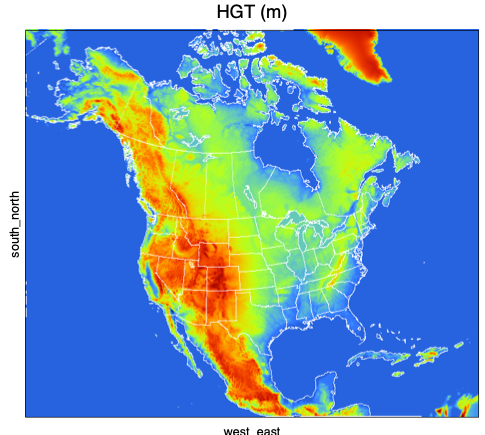

The Institute’s scientists will apply machine learning techniques to large collections of digital images from museums, labs and other institutions, as well as photos taken by scientists in the field, camera traps, drones and even members of the public who have uploaded their images to platforms such as eBird, iNaturalist and Wildbook. By training algorithms to extract biologically meaningful information from these images, researchers aim to generate new knowledge about organisms and species, including insights about how they evolve and interact within ecosystems.

Critical to this effort is the ability to categorize features of living organisms with standardized vocabularies, known as bio-ontologies, that can be “understood” by computers. Having served as a key contributor on the Phenoscape team for several previous NSF-funded projects, Balhoff is steeped in the art of encoding biological information in computable ways.

“There’s a lot of work going on with machine learning, and one of the key pieces of this project is to develop ways to incorporate ontology-based knowledge into machine learning processes,” said Balhoff. “We’re providing expertise in bio-ontologies to incorporate what we know about anatomical relationships into this image analysis system.”

This approach could ultimately enable a computer to identify key features in an image, such as an eye, mouth or dorsal fin, and then use automated reasoning to check that the interpretation makes anatomical sense. Repeating this process for large collections of images can give scientists a powerful platform for investigating new or previously understudied species or help them better understand the relationships between organisms.
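To make the idea of an anatomical sanity check more concrete, here is a minimal sketch, assuming a hypothetical toy ontology written as plain Python dictionaries. It is not the Institute’s method or any real ontology’s content: the taxon hierarchy, the feature-to-taxon mapping and the function names `ancestors` and `check_detections` are all invented for illustration, and the taxon constraints are deliberately simplified rather than biologically complete. Given the taxon an image is believed to show and the features a model claims to have detected, the check walks up the “is a” hierarchy and flags any feature that is not expected for that lineage.

```python
# Hypothetical toy ontology (illustrative only, not biologically complete).
# Each taxon points to its parent taxon via an "is a" link.
TAXON_PARENT = {
    "Actinopterygii": "Vertebrata",  # ray-finned fishes
    "Aves": "Vertebrata",            # birds
    "Vertebrata": None,              # root of this toy hierarchy
}

# Hypothetical constraint: the taxon whose members are expected to have each feature.
FEATURE_TAXON = {
    "eye": "Vertebrata",
    "mouth": "Vertebrata",
    "dorsal fin": "Actinopterygii",
    "beak": "Aves",
}


def ancestors(taxon):
    """Return the taxon followed by all of its ancestors, following "is a" links."""
    lineage = []
    while taxon is not None:
        lineage.append(taxon)
        taxon = TAXON_PARENT[taxon]
    return lineage


def check_detections(taxon, detected_features):
    """Return the detected features that do not make anatomical sense for the taxon.

    A feature passes if the taxon it is associated with appears in the lineage of
    the taxon shown in the image; unknown features are flagged as well.
    """
    lineage = set(ancestors(taxon))
    return [f for f in detected_features if FEATURE_TAXON.get(f) not in lineage]


if __name__ == "__main__":
    # A model labels a bird photo but also reports a dorsal fin: that detection is flagged.
    print(check_detections("Aves", ["eye", "beak", "dorsal fin"]))
```

Running the script prints `['dorsal fin']`, since a dorsal fin is not expected anywhere in the toy lineage of birds. Real bio-ontologies express such relationships in formal logic and are checked with automated reasoners; the dictionaries here only stand in for that machinery.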

As an inaugural institute for data-intensive discovery in science and engineering within NSF’s Harnessing the Data Revolution initiative, the Imageomics Institute will be part of a broader effort to form a national collaborative research network dedicated to computation-enabled discovery.

In addition to The Ohio State University and RENCI, the project will involve biologists and computer scientists from Tulane University, Virginia Tech, Duke University, and Rensselaer Polytechnic Institute; senior personnel from Ohio State, Virginia Tech and six additional institutions; and collaborators from more than 30 universities and organizations around the world.